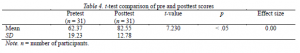

Is this a real study, or is this just fantasy?

I’m caught in a landslide of readings, with no escape from reality.

But it helped me open my eyes, and look up to the future and see.

I’m just a poor science teacher, I need some sympathy.

Because summer came and is about to go, the stress is pretty high, not very low.

Any way this blog goes doesn’t really matter to me, because it’s about me.

As the end of the semester is in sight, I feel this image is an accurate representation of how I felt before and after this journey.

Appreciating Research – as a Scholar

Taken as a whole, the cartoon symbolizes the need to examine research (the characters) in detail to understand the findings (the joke). My background in the sciences gave me a biased view: I assumed published research articles had reliable findings because they had made it through peer review, which would have pointed out any flaws in their design. Yet McAteer (2013) described examples of researchers who deliberately selected data that supported their hypotheses or manipulated their data to produce a favorable result. Together with the infamous Wakefield (1998) study that ‘linked’ the measles, mumps, and rubella vaccine to autism, this made me re-evaluate my trust in published articles.

O’Cathain’s (2010) proposed framework for assessing the quality of mixed methods research is perhaps the most detailed criteria list I’ve encountered in this course. However, I found the Mixed Methods Appraisal Tool more concise and easier to apply. Another useful tool is Boote and Beile’s (2005) literature review rubric, which helps readers assess whether researchers fully understand the terminology and current knowledge in their field, as opposed to following standard methodology without understanding why it is necessary. In previous blog posts (here and here), I applied those tools to articles after an initial reading and found that they changed my perception of the research. In the first case, I questioned the validity of the findings because the report was vague; in the second, the literature review lacked a broader inspection of the field. Going forward, I have a better appreciation for reading research articles, which translates directly into a better understanding of how to teach the scientific method as a science teacher.

Appreciating Research – as a Teacher

As educators, we receive a lot of resources and workshops on how to improve our practice. Caught up in the energy of a presentation and its potential to improve our classes, we are tempted to implement innovations immediately. However, I believe we need to examine those ideas much as we examine research and its findings. For example, we discussed how classes can use social media such as Twitter or blogs to foster student relationships and interaction, and we saw it succeed in both its implementation and its purpose in our own Master’s cohort. Still, it is important to have reservations about restructuring our own classes on this model without doing prior research, such as seeking administrative or parental approval. Both of those parties, and teachers themselves, need to put the safety of students first, which requires examining whether those services comply with the Freedom of Information and Protection of Privacy Act (FOIPPA). Even after looking into those aspects, there is still another important party to consider: the students.

In the last school year, I experimented with incorporating my school district’s Idea-X challenge into my Applied Design, Skills and Technology (ADST) class. The challenge aligned with the course’s curricular goals, so I let the class decide whether to pursue it at the start of the second semester. The school approved it, my students were interested, and parent consent forms were submitted; everything was on track until the first information session. The organizers asked each team to create a group Instagram account, a social media service I knew most of my students used. Their motivation dropped faster than Facebook share prices in 2018, and some even avoided class.

Because I saw the students only twice a week and had taught them for roughly three months at that point, I sorely overestimated how well I knew my class. Their unwillingness to have a digital presence outside their closed circle, combined with some unclear expectations for the group account, made the whole exercise flop despite my attempts to offer social media support. Looking back now, rather than treating that experience as a one-off or giving up, I will make my students’ comfort with social media, which I failed to research deeply enough, a key consideration for future activities. I also feel better now about my decision to drop the project rather than insist on it because it met curricular competencies; I can frame that decision as an example of learner-centered pedagogy.

Open Mindset – as a Researcher

Looking back at the first comic, I see myself as the mouse in the corner making fun of non-traditional (constructivist) approaches to research. I placed greater significance on studies that quantify results and directly demonstrate causation than on those describing social observations and analysis, which might be open to interpretation. This mindset changed after two realizations: (i) a quantitative approach can be unsuitable for social research, and (ii) other methodologies can be just as rigorous as quantitative studies. The second realization was heavily influenced by Onwu and Mosimege (2004), who clearly explained how oral practices in traditional medicine are subject to the same replicability expected in the scientific method; a lack of empirical documentation should not make them less valid than Western science (discussed in a previous blog post). To wean myself off the superfood that is quantitative research, I began exploring a more balanced diet: mixed methods (which combine the familiarity of traditional positivism with the feasibility of constructivism in social research) and action research (a blend of educational theory and practice). For now, action research seems to be the ideal path forward because it focuses on improving practice, which is essential to me as an educator and is one of the two main reasons I enrolled in the Master’s program.

Open Mindset – as a Teacher

I have always viewed myself as a flexible teacher, open to new technology, pedagogy, and student suggestions, but I still catch myself, like the researchers in the first cartoon, simply using modern technology to do the same thing as before. It struck me like thunderbolt and lightning, very, very frightening: I tossed a pile of notes at the students and expected them to regurgitate it on an exam before the end of the year. My main goal over the summer break was to spare them their lives from this monstrosity and look for more engaging ways to connect the content to conversations or critical reflections. I also had to figure out what Aboriginal education is and how to include it in my classes as part of BC’s new curriculum. Luckily, the course readings have shown me that these individual ideas are interconnected; it is not as though Beelzebub has put these tasks aside for me, and just for me.

First, the availability of online resources is not exclusive to online and blended courses; rather, it is part of a movement towards open pedagogy in education. Students in face-to-face classes can also access these resources, such as online textbooks and video sites, which gives them a wide breadth of material. Open access allows teachers and students to engage in learner-centered pedagogy without being restricted by the availability of resources or expertise. The latter point involves students connecting (directly or through teacher moderation) with specialists in the field via social media or simple email to address their curiosity. The Pacific School of Innovation and Inquiry is one example of this being done effectively, as Jeff Hopkins mentioned in our meetings. These interactions may also serve as a learning opportunity for students in professional digital citizenship, such as curating content and communicating with individuals online, a necessary skill that I might be able to model in my own quest to write a research project.

Turning to course design, a correctly scaffolded model of this personalized inquiry would circumvent the problem of online education being “low context so that it can be consumed by any user, anywhere” (Tessaro, Restoule, Gaviria, Flessa, Lindeman, and Scully-Stewart, 2018). Finally, all these parts can be woven together under the banner of Indigenous pedagogy, in which learning happens through conversation (be it synchronous or asynchronous) rather than through assembly-line construction where no one sees what the final product will be. It turns out my summer homework will be less strenuous through a natural, holistic lens than through a compartmentalized one, something students may also notice when examining their own learning under this model.

But before tackling these ideas for the upcoming school year, I need to look after my own health and wellness. So for now:

Nothing really matters, everyone can see my blog.

Nothing really matters

Nothing really matters to me~