Background Information about the Article

Ever since British Columbia (BC) modernized its K-12 curriculum and began implementing it in 2016, teachers have been revisiting their pedagogies to shift from content-focused teaching to inquiry-based or problem-solving approaches. As a high school educator who teaches both blended- and distance-learning classes, I have been looking for models of online learning spaces where students work collaboratively. The article I chose, “Wikis for a Collaborative Problem-Solving (CPS) Module for Secondary School Science” (DeWitt, Alias, Siraj, & Spector, 2017), used Wiki pages as the platform for students’ online collaboration. The research looked at three aspects: (1) what types of interactions occur on the Wiki in the context of learning science, (2) to what extent CPS in a wiki encourages social and cognitive processes, and (3) to what extent the CPS module improves achievement (DeWitt et al., 2017). Delving into the background of this study, I found that DeWitt, Alias, and Siraj are associate professors at the University of Malaya, Malaysia. In addition, according to her biography, DeWitt had previously worked for the Educational Technology Division of Malaysia’s Ministry of Education. The literature supporting this study stems from a report by the Ministry of Education of Malaysia (2013) finding that mathematics and science achievement in the country had declined in the preceding few years, and from the observation that “few studies have examined online collaboration and problem-solving in science” (DeWitt et al., 2017). The sample consisted of 31 volunteers from an urban high school in Malaysia. The school was chosen because its students varied in academic strength and reflected the multiracial community, and because it was convenient for the researchers. The findings could be useful for fellow researchers, teachers, and policy makers in Malaysia when considering future directions for research, classroom implementation, or curricular development.

How the Research was Carried Out

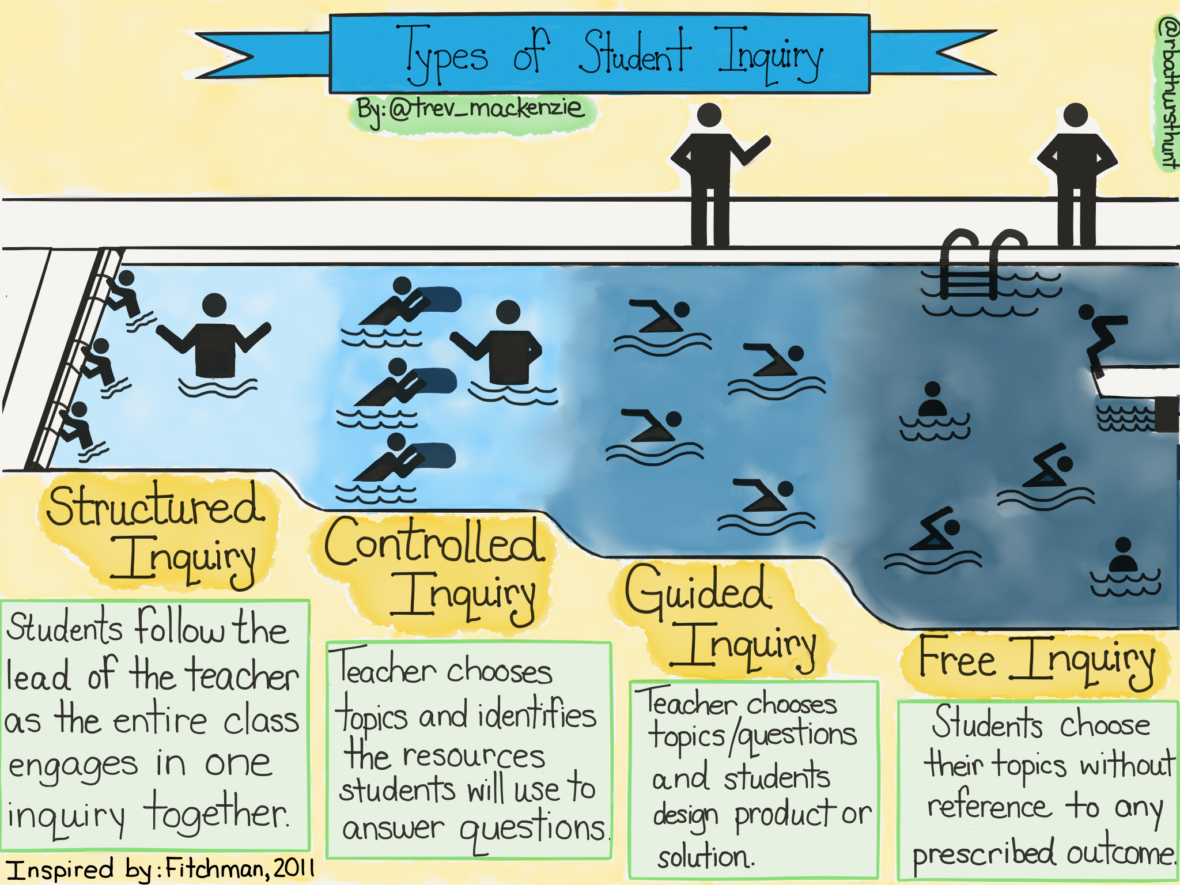

The researchers grouped their volunteers into teams of 7 or 8 students and assigned each group a type of meal. Each group had three weeks to analyze the food classes present in that meal. A CPS module had already been developed for this activity, and students received a laptop as well as an orientation on how to use the Wiki, learning resources, and problem tasks available in the module. The researchers collected all the discussions on the wiki, student journals, and individual student interviews, then manually coded each communication as one of the following interactions:

Learner – Content: learners engaging with the content.

Learner – Learner: interactions between the students.

Learner – Instructor: interactions with the teacher.

Learner – Interface: interaction with the technology medium.

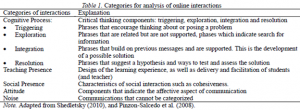

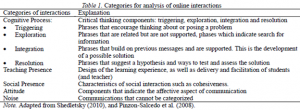

The first three categories were described by Moore & Kearsley (2005), while Learner-Interface was described by Hillman, Willis, & Gunawardena (1994). DeWitt et al. also looked at the frequency of each learner interaction and further categorized the interactions into the social and cognitive processes shown in Table 1. Lastly, the researchers employed a pre-test and post-test containing simple, open-ended questions to assess the students’ proficiency with regard to food classes as a result of the CPS module.

Research Findings and Discussions

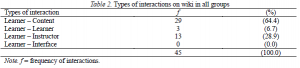

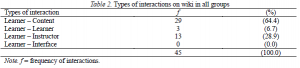

After collecting and coding all communications, DeWitt et al. quantified the frequency of each type of interaction and summarized it in Table 2. They noted the low number of Learner – Learner and Learner – Interface communications, which they believed was due to discussions occurring outside the wiki, such as in face-to-face meetings between the students. They also pointed to studies by Ertmer et al. (2011) and Huang (2010), both of which examined online interactions on wikis and found a similar lack of interaction.

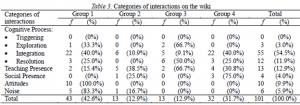

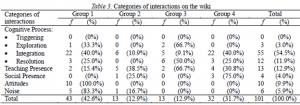

To examine the extent to which CPS encouraged social and cognitive processes, the researchers further divided the communications into the categories seen in Table 1 and reported the frequency of those interactions in Table 3. They noted that most interactions were cognitive processes, with a total of 69.3%, followed by teaching processes at 12.9% and social processes at 4%. DeWitt et al. attributed the lack of Triggering and Exploration cognitive processes either to students believing the Wiki should contain solutions to the problem, or to those processes occurring in face-to-face discussions that were not captured by the researchers.

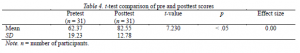

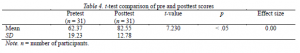

The third aspect of the research looked at the effectiveness of the CPS module for learning about food classes, which was assessed by examining students’ pre-test and post-test scores. The researchers found a significant difference between the means of the paired tests, as seen in Table 4. This led DeWitt’s team to conclude that applying the CPS method on a Wiki allowed for varying types of interactions, promoted social and cognitive processes in learners, and improved students’ knowledge.
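To make the pre-test and post-test comparison concrete, the following is a minimal sketch of how a paired-samples t statistic is computed. It is not the authors' analysis: the function name `paired_t_statistic` and the scores are hypothetical and invented purely for illustration, and the article's actual values are those reported in its Table 4.

```python
import math
import statistics


def paired_t_statistic(pre, post):
    """Return (t, degrees_of_freedom) for a paired-samples t-test.

    Each student contributes one pre-test and one post-test score,
    so the test works on the per-student differences.
    """
    if len(pre) != len(post):
        raise ValueError("pre and post must have the same length")
    diffs = [after - before for before, after in zip(pre, post)]
    n = len(diffs)
    if n < 2:
        raise ValueError("need at least two paired scores")
    mean_diff = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)  # sample standard deviation
    if sd_diff == 0:
        raise ValueError("all differences are identical; t is undefined")
    t = mean_diff / (sd_diff / math.sqrt(n))
    return t, n - 1


# Hypothetical scores for six students (NOT data from the article).
pre_scores = [4, 5, 3, 6, 5, 4]
post_scores = [7, 8, 5, 9, 7, 8]

t_value, df = paired_t_statistic(pre_scores, post_scores)
print(f"t = {t_value:.3f}, df = {df}")
```

A large t value only shows that scores changed between the two tests. Without a comparison group, it cannot show that the change was caused by the online delivery rather than by three weeks of exposure to the content.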

Applying O’Cathain’s Proposed Framework of Quality

After reading my selected article, I found myself questioning portions of the research process. I therefore re-examined DeWitt’s study through the lens of the Comprehensive Framework proposed by O’Cathain (2010). I applied each domain to the whole study and considered whether I could clearly state the presence of each element within the article. Bolded elements indicate where the quality of the research was in question.

- Planning Quality

- Foundational element: clearly stated in introduction.

- Rationale Transparency: the article doesn’t explicitly state the type of research being conducted, or why a mixed methods approach was taken.

- Planning Transparency: purpose of study clearly outlined.

- Feasibility: study could be completed within short timeframe.

- Design Quality

- Design Transparency: design type is known and process was described.

- Design Suitability: a mixed methods approach may be most convenient, but the qualitative and quantitative elements feel unsuitable. For instance, comparing pre-test and post-test scores from the online CPS module against those from traditional in-person teaching would provide a far stronger argument about the difference in effectiveness. Similarly, a lack of certain interactions such as learner-learner doesn’t necessarily mean students are interacting face-to-face; an actual absence of interaction, or interaction with people outside of the student group, is a strong possibility.

- Design Strength: study was not optimized for breadth as the test scores were analyzed according to standards set by just 2 Biology teachers. Depth of the study in terms of coding interactions into different categories was also prone to bias, as it was done by just 2 researchers.

- Design Rigor: rigor is questionable as the researchers included a “noise” category in interactions. I strongly believe that student comments were not completely without rationale and should be considered an affectionate attitude instead. Furthermore, allowing 3 weeks for collaboration between 31 students without any mention of teambuilding or apparent scaffolding to facilitate collaboration could account for the lack of interactions between students.

- Data Quality

- Data Transparency: collection method and data were available.

- Data Rigor: collection of student interactions was not conducted with rigor, particularly in failing to account for face-to-face discussions that could not be collected.

- Sample Adequacy: 31 student volunteers is not an adequate sample size, nor is it representative of a usual classroom dynamic, which would include disengaged students.

- Analytic Adequacy: Qualitative aspects of describing student interactions relied on interpretations of just 2 researchers.

- Analytic Integration Rigor: not implemented with rigor as transformation of qualitative data (categorization of comments) into quantitative (frequency) was conducted and checked by just 2 researchers.

- Interpretive Rigor

- Interpretive Transparency: clear which findings came from which method.

- Inference Consistency: some consistency between inferences and findings, although the explanation offered for the lack of learner-learner and learner-interface interactions was not completely adequate. Students may actually have been working individually without collaborating, or they may not have remembered how to contribute to the Wiki page.

- Theoretical Consistency: findings consistent with current knowledge.

- Interpretive Agreement: others are likely to reach a similar conclusion based on the findings.

- Interpretive Distinctiveness: conclusions are more credible than other possibilities.

- Interpretive Efficacy: meta-inferences appropriately incorporate inferences from the qualitative and quantitative findings.

- Interpretive Bias Reduction: no bias-reduction steps were taken, as the research team was composed of staff at the same university.

- Interpretive Correspondence: Inferences correspond to purpose of research study.

- Inference Transferability

- Ecological Transferability: difficult to apply findings to other contexts such as different subjects, or other settings like schools outside of Malaysia.

- Population Transferability: difficult to apply findings to other population dynamics such as rural populations lacking internet access, or to regular classroom dynamics that include students who require learning assistance through Individual Education Plans (IEPs).

- Temporal Transferability: Has potential for further research or future policies.

- Theoretical Transferability: Has potential to be re-assessed using different research method or different tools for analyzing findings.

- Reporting Quality

- Reporting Availability: report assumed to be successfully completed within time and budget.

- Reporting Transparency: report assumed to adhere to Good Reporting of a Mixed Methods Study (GRAMMS).

- Yield: report provides a more worthwhile result than two individual studies would.

- Synthesizability

- I applied the Mixed Methods Appraisal Tool by Hong et al. (2018) to this article as well.

- Utility Quality

- Findings from the article have potential use for researchers, educators, and curriculum designers.

To summarize, I had various questions and concerns regarding the chosen article, and applying the framework highlighted them clearly and let me describe them in detail. Had the researchers given those quality markers more attention, I believe the overall quality of the research would have been higher. From my perspective, the research by DeWitt et al. is worth re-examining under more stringent conditions. One example was suggested by the article itself: reduce the possibility of face-to-face discussions by separating students geographically. Another option is to run concurrent in-person and purely online classes of the CPS module. This would still be feasible within the same time frame and would reduce confounding variables, such as differences in content, allowing a more accurate assessment of the delivery method. The researchers could also be more transparent about how they categorized students’ communications, and seek agreement from more than 2 researchers to reduce bias in interpretation.
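One common way to report how closely two coders agree when sorting comments into categories is Cohen's kappa, which corrects raw agreement for the agreement expected by chance. The sketch below is only an illustration of that idea. The function name `cohens_kappa` and the labels assigned by the two coders are hypothetical, and nothing here is taken from the article's data or stated method. With more than two coders, a multi-rater statistic would be needed instead.

```python
from collections import Counter


def cohens_kappa(coder_a, coder_b):
    """Cohen's kappa for two coders labelling the same items."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("both coders must label the same non-empty set of items")
    n = len(coder_a)
    # Proportion of items on which the two coders chose the same label.
    observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    # Agreement expected by chance, from each coder's label frequencies.
    counts_a = Counter(coder_a)
    counts_b = Counter(coder_b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1:
        return 1.0  # both coders used a single identical label throughout
    return (observed - expected) / (1 - expected)


# Hypothetical codes for eight wiki comments (NOT data from the article).
coder_a = ["content", "content", "learner", "instructor",
           "interface", "content", "learner", "content"]
coder_b = ["content", "content", "learner", "content",
           "interface", "content", "instructor", "content"]

kappa = cohens_kappa(coder_a, coder_b)
print(f"kappa = {kappa:.2f}")
```

Reporting a statistic like this alongside the coding scheme would let readers judge how much the category frequencies depend on individual interpretation.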

References

DeWitt, D., Alias, N., Siraj, S., & Spector, J. M. (2017). Wikis for a Collaborative Problem-Solving (CPS) Module for Secondary School Science. Educational Technology & Society. 13.

Ertmer, P. A., Newby, T. J., Liu, W., Tomory, A., Yu, J. H., & Lee, Y. M. (2011). Students’ Confidence and Perceived Value for Participating in Cross-Cultural Wiki-Based Collaborations. Educational Technology Research and Development, 59(2), 213–228.

Hillman, D. C. A., Willis, D. J., & Gunawardena, C. N. (1994). Learner interface interaction in distance education: An Extension of contemporary models and strategies for practitioners. The American Journal of Distance Education, 8(2), 30-42.

Hong, Q. N., Pluye, P., Fabregues, S., Bartlett, G., Boardman, F., Cargo, M., Dagenais, P., Gagnon, M-P., Griffiths, F., Nicolau, B., O’Cathain, A., Rousseau, M-C., & Vedel, I. (2018). Mixed Methods Appraisal Tool (MMAT) Version 2018. McGill University, Department of Family Medicine. Retrieved from http://mixedmethodsappraisaltoolpublic.pbworks.com/w/file/fetch/127916259/MMAT_2018_criteria-manual_2018-08-01_ENG.pdf

Huang, W.-H. D. (2010). A Case Study of Wikis’ Effects on Online Transactional Interactions.

Ministry of Education Malaysia (MOE). (2013). The Malaysia education blueprint 2013–2025: Preschool to post secondary education. Putrajaya, Malaysia: Ministry of Education Malaysia. Retrieved from https://www.ilo.org/dyn/youthpol/en/equest.fileutils.dochandle?p_uploaded_file_id=406

Moore, M., & Kearsley, G. (2005). Distance education: A systems view (2nd ed.). Ontario, Canada: Thomson Wadsworth.

O’Cathain, A. (2010). Assessing the Quality of Mixed Methods Research: Toward a Comprehensive Framework. In A. Tashakkori & C. Teddlie, SAGE Handbook of Mixed Methods in Social & Behavioral Research (pp. 531–556). https://doi.org/10.4135/9781506335193.n21